
| Yes, it is white with a pink cover, and I put it on the boxer. |

I finally caved in, or rather my better half did. Now I have an iPad (Ho, Ho, Ho). It's not a machine-gun, but it affords some other, equally amazing, possibilities: you can surf in bed, surf in cafes, and you can even surf on the loo. Amazing, I say! What I really want it for is drawing. For research articles there is seldom any real use for drawing, but when writing reviews, preparing presentations, and teaching, it is indispensable.

Different lecturers and teachers have different approaches, and different students require different teaching strategies, but everything gets better with good pictures. For review articles and formal presentations you need high-quality illustrations, while for teaching I find it better to draw live, so that the students can follow along. There is of course some middle ground: students who need original data and high-quality illustrations as hand-outs, and formal presentations that can include free-hand drawing.

Anyway, these two ways of working need somewhat different programs, or Apps, as I understand they are called. Before we start drawing, however, one does need an implement. I got the stylish aluminium stylus from "Just | mobile". It has a solid feel, looks stylish (oh no, I said it again), and... well, that's it really.

Now, to the actual drawing. Let's start with free-hand drawing that you can use just to show things. There is a multitude of simple drawing programs for the iPad, and you can also use a more advanced application for your quick sketching. The one I got was Bamboo Paper from Wacom (which otherwise makes professional digitisers).

It has three features I like:

1: It organises drawings by notebooks. That means that all drawings and notes from one lecture are automatically connected. They can even be exported directly as a multi-page PDF for use as hand-outs.

2: When connected to an external screen it just shows the drawing area. The controls are only visible on the iPad.

3: The interface is really simple. You can draw, erase and undo/redo. The pen has three different settings for line thickness and six colours (well, actually three, since half the colours are shades of blue).

Let us turn to real drawing and the creation of publication-quality illustrations. For this you need two things: a sketching program and a line-art/vector program. The first is necessary because that's how you, or at least I, develop and refine the concept. The second is what delivers "publication quality", i.e. freely scalable line-drawings that can be output as EPS or PDF, depending on your publisher.

I found two programs for each function with slightly different capabilities. For sketching I got SketchBook from Autodesk (the maker of AutoCAD) and ArtRage. These are two very different programs. ArtRage was created to simulate working with real media. You have a wide choice of paper and canvas variants (and can change after the fact), and an equally wide range of simulated media, from pencil to oil, water-colour and inks. The amazing thing with it is that it simulates how these media behave in the real world. Oil-colour mixes on the canvas, and water-colour bleeds.

Three examples from ArtRage: oil and water-colour with photographs as originals, and a pencil sketch of Madicken sleeping in the office chair.

As a bonus it has a well-designed ability to work with layers, so you can put the sketch, background colour fields and shadows & highlights on different layers and work with them independently. It even has a special layer for a photograph or some other image you want to use as basis for your work of art. The interface is very clean, and at the same time powerful. There is an implement selector in the left corner and a palette in the right; if you leave them open they are single-click interfaces. To say that I am happy with ArtRage would be an understatement. It is an achievement in programming and design. I may never use paper again.

SketchBook is more of an honest computer drawing-program and has all the usual things, pencil, brush, air brush and so on, but it doesn't try to be oil on canvas. The interface is very clean and the most important things can be controlled with gestures (pencil type and colour, layer and undo/redo), but otherwise it is a two- to four-click interface. In my hands it is a lot like drawing with ink on paper. Not for absolute scale-drawing, but good for illustration purposes.


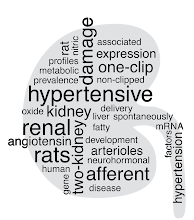

| Quick concept for the two kidney, one clip model of renal hypertension made in SketchBook. I used three layers, one for the sketch, one for colouring, and one for shadows. |

Something like that may be good enough, or we may want something a bit more stylised for publication. In that case we can use the sketch as a basis for a vector-based drawing. I got two programs rumoured to be the best vector-Apps on the iPad: iDesign and Inkpad. iDesign is supposedly the more competent of the two, allowing true CAD-like features like absolute scaling, and exact positioning and angling by numbers. However, it took me over a minute to find the undo-button. That's when I quit and tried Inkpad instead. There will be no review of iDesign at this time.

Addendum: I figured out how iDesign works. I had to make a more exact graph-like illustration, and Inkpad turned out to be completely useless since it just doesn't handle that level of precision. Even the undo/redo button was much easier to find once I figured out how the interface works. There are four extendable tool-strips, one per side, with all the frequently used commands and tools. There is a snapping-grid that increases in exactness with zoom, and can be set to scale. Each point and line can be exactly positioned by number: X, Y, height, width and rotation. Colour gradients are not as easy as in Inkpad (i.e. I cannot find them). In summary it is a CAD application on the iPad, and as such it makes you work in a more precise manner.

Inkpad just worked for me. The interface is completely self-explanatory. It does not have that many functions; you can basically draw paths and fill them. In addition, filling with gradients is as easy and versatile as I have ever seen it done, simply marvellous. It is also easy to import one or more pictures to use as originals. Most importantly, the undo and redo buttons are immediately obvious at the bottom of the page.


| Using the previous image as original it took me about 20 minutes to create this scalable version with smooth lines and even gradients in Inkpad. Looks professional, is what it does. It also has no personality, but that is what is expected from scientific illustrations. |

Although I have done mostly photography the last couple of years, drawing and painting were my original passions. In a fortuitous turn of events the sale of my camera allowed me to return to the original fold, with new and improved tools. The down side is that the quality of the photographs has degraded significantly.